Is Apple’s New Lidar Sensor a Game-Changer for AR Apps?

Whenever Apple releases a major new product, users and journalists focus on aspects of the gadget like size, pixel density, performance, and headline features.

These characteristics are all important in their own right, but smaller innovations tend to get overlooked.

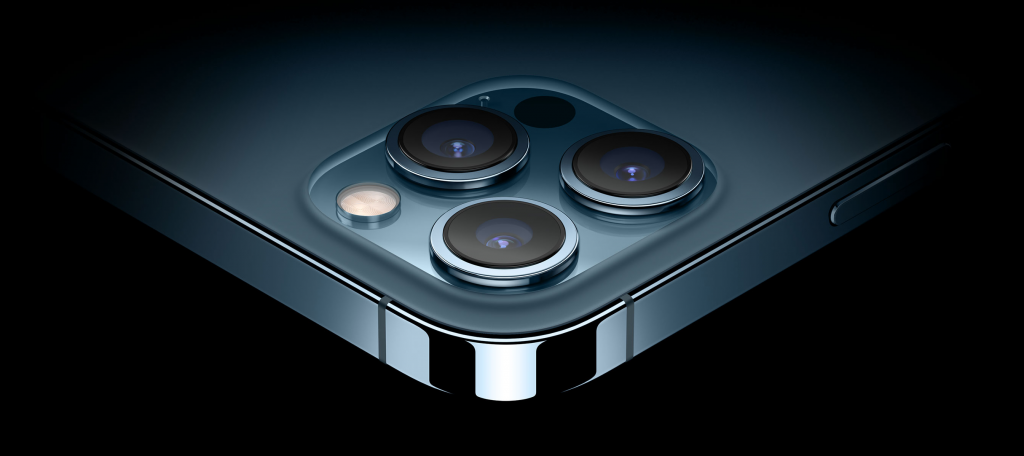

This is what almost happened to the Lidar sensor in the iPhone 12 Pro. It appears as a small black circle in the bottom corner of the phone’s camera unit, and you could easily mistake it for another lens or an oddly shaped flash. In fact, Lidar is something entirely different, and this small sensor has big implications for the future of AR.

What is Lidar?

Lidar is an acronym of sorts, standing for “light detection and ranging”. The technology works by sending out many tiny pulses of infrared light and registering their reflections as they bounce back. It’s like sonar, except that the device registers light waves rather than sound waves. By measuring how long each pulse takes to return and the angle of each reflection, the Lidar device builds a 3D spatial image of the targeted area.
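To make the time-and-angle idea concrete, here is a minimal Python sketch of the underlying arithmetic. It is an illustration only, not Apple’s implementation: the function names, the angle convention, and the sample timing value are assumptions made for this example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_round_trip(seconds):
    """Distance to the surface. The pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * seconds / 2.0


def point_from_pulse(seconds, azimuth_deg, elevation_deg):
    """Turn one pulse's round-trip time and direction into an (x, y, z) point.

    Assumed convention: the sensor sits at the origin, z points straight
    ahead, x to the right, and y up. Azimuth turns left/right, elevation up/down.
    """
    r = range_from_round_trip(seconds)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.sin(az)
    y = r * math.sin(el)
    z = r * math.cos(el) * math.cos(az)
    return (x, y, z)


# A pulse that returns after 20 nanoseconds hit a surface roughly 3 m away.
print(range_from_round_trip(20e-9))
print(point_from_pulse(20e-9, azimuth_deg=10.0, elevation_deg=-5.0))
```

Repeating this for many pulses fired in different directions yields a cloud of 3D points, which is the raw material for the spatial image described above.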

Before we get ahead of ourselves in praising Apple’s innovative genius, we should mention that Lidar has been around for decades and is essentially 3D laser scanning, just under a different name. Still, Apple is the first major tech company to make the technology accessible to millions of consumers – first in the 2020 variants of the iPad Pro, and now with the iPhone 12 Pro.

Why this is important for AR

If you are familiar with augmented reality apps, you have probably come across those that project digital models onto real-life surroundings by analyzing objects and surfaces and determining where the model could realistically be placed. Some of these apps are good at spatial accuracy, but none are perfect, and the built-in algorithms can easily be fooled by poor lighting or unusual textures. Lidar makes augmented reality much more accurate by relying on direct depth measurements from the sensor instead of estimates computed from camera images.

The impact of Lidar in real life

New tech is often hyped as “the next big thing” when it comes out, but consumers and enterprises can make a more accurate judgment call by looking at its real-life impact, and not just the marketing bluster. Here are some examples of how Lidar is already improving AR:

1. Easy measurements

Would you trust an app to make measurements of your home and its interior elements? If you answered no, you probably haven’t heard of Canvas. This mobile app makes the most of the Lidar sensor and takes measurements of home interiors (rooms, beds, accessories, etc.). It might not be accurate to a fraction of an inch, but it can probably save you from reaching for a tape measure when an estimate is all you need.

2. Accurate digitizing of real objects

When you want to bring images of real objects into a digital document (e.g. for a presentation), the process can be a real hassle. First, you take the photo, then open it in an editor, cut along the edges of the targeted object, and only then paste it in. However, with the ClipDrop app, which uses Lidar, you can “extract” objects from their backgrounds without having to manually trace anything. The app recognizes the edges of the object and captures it seamlessly.

3. A next-generation escape room

Escape rooms are fun, but you have to travel to select locations to enjoy them. However, with an app that scans your surroundings and determines where to project the rooms and sections of the walls, you can enjoy the experience even in your own home. ARia’s Legacy is one such app, and Lidar technology prevents the app from drawing borders or pathways for the escape room in unreachable places.

4. Bringing a new level of immersion to a childhood game

Games based on AR have already proven their worth on the market (think Pokémon Go and Harry Potter: Wizards Unite), but Lidar makes them even better. For example, the popular children’s game “The Floor is Lava” has been reimagined in the Hot Lava app, which precisely measures and identifies the floor of a particular setting and projects lava onto it, while leaving raised objects and surfaces untouched.
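The floor-versus-furniture distinction can be sketched in a few lines of Python. This is a hypothetical simplification, not how Hot Lava actually works: it assumes the scan is a list of (x, y, z) points with y as height in metres, treats the lowest scanned point as floor level, and uses an arbitrary tolerance. The `split_floor` name is made up for this example.

```python
def split_floor(points, tolerance=0.05):
    """Separate scanned points into floor and raised surfaces.

    points: list of (x, y, z) tuples, with y as height in metres (assumed).
    tolerance: how far above the lowest point still counts as floor (assumed).
    """
    if not points:
        return [], []
    floor_height = min(p[1] for p in points)
    floor = [p for p in points if p[1] - floor_height <= tolerance]
    raised = [p for p in points if p[1] - floor_height > tolerance]
    return floor, raised


# Two points on the floor, one on a sofa seat, one on a table top.
scan = [(0.0, 0.00, 1.0), (0.5, 0.02, 1.2), (1.0, 0.45, 2.0), (1.5, 0.75, 2.5)]
floor, raised = split_floor(scan)
print(len(floor), len(raised))
```

In this toy version, the lava would be drawn only over the points returned in `floor`, leaving the sofa and table as safe ground.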

So what does it all mean for AR?

As we have mentioned, built-in algorithms in AR apps can already analyze visual settings, but Lidar takes the process to the next level and leaves little room for imaging errors and discrepancies. All of this amounts to a more immersive and enjoyable experience for users. While the technology is currently supported on only two device lines, the 2020 iPad Pro and the iPhone 12 Pro, all signs point to Apple continuing to invest in AR technology (including integrating it into Apple TVs and a rumored future headset).

Lidar may be nothing more than a trend and an optional improvement at the moment, but if it makes its way to all forthcoming Apple devices, we could see billions of people enjoying everything advanced AR has to offer. That would be a game-changing impact.

Photo credit: Apple.com

Creator: Mikhail Scherbatko, creative writer for Program-Ace, an award-winning IT company with a 26+ year track record of success in AR app development.